Americans’ Views Mixed on Tech’s Role in Politics

New polls give insight into what people want platforms to do - or not do - around elections

Americans are awash in polls right now. There is no shortage of organizations trying to help us understand who is winning the election or what Americans are most concerned with.

Polls are but a snapshot in time. They do not necessarily predict what will happen. James Carville and Mary Matalin discuss this difference well in the recent documentary about Carville, where they explain that they use polls to help them make strategic decisions.

Many may not realize that tech companies also use polls and surveys to understand how their users, and people more broadly, feel about their products. They use the results to make decisions and to track whether their efforts change people's opinions of their platforms.

I monitor political and tech polls closely and keep a running list of questions I would ask if I could conduct one. About a year ago, I helped organize one with the Bipartisan Policy Center, States United, and the Integrity Institute, where we asked questions about who voters trusted for election information.

Most recently, my good friend Sarah Hunt, who runs the Rainey Center, invited me to submit a few questions to include in a poll they were conducting in the field.

I jumped at the chance as I had some burning topics in mind, such as:

- The media’s impact on how people view artificial intelligence.
- How much Americans care about censorship or content moderation compared to other tech issues.
- Which election activities people were okay with platforms engaging in, and which ones they were not.
- How much political content people wanted to see in their feeds.

Below are some of the key takeaways from that poll. You can see the toplines and the cross-tabs here. We also asked some questions on AI regulation that I’ll get to in a future post. I’m also adding the results of a few other recent polls.

Again, take these with a grain of salt. They are a snapshot in time and the results of only one poll. Some will seem contradictory, partly because what people say they want and what they actually do can differ. People will also interpret the questions and choices differently.

That said, these results offer some excellent indicators of where people stand.

First, the methodology. These results are based on an online sample of 1,061 respondents fielded through web panels from September 21 to September 22 and weighted to education, gender, race, survey engagement, and 2020 election results. The margin of error is ±3.6 percentage points.

The top tech issues were child online safety, election integrity, and privacy. Censorship and content moderation were at the bottom. Interestingly, Republicans were ten points more likely than Democrats to be worried about censorship but roughly on par with them when it came to content moderation. Since many on the right increasingly use the word “censorship” to describe content moderation, this doesn’t surprise me.

Most people get information on AI and the election from online news websites, TV news, and social media. This is what I expected, and it is why polls showing how nervous people are about artificial intelligence concern me. Most people are nervous because the news media tells them to be. Again, this is where my “panic responsibly” mantra comes from. There are certainly things we need to worry about when it comes to AI, but Silicon Valley needs to be very careful not to let the media define this technology for the American people before they have even used it. We might be past that point already, but it is important for people to know who is shaping their perceptions of what to worry about.

The majority of people want to see little or no political content in their feeds. Fifty-four percent would like to see less political content than they see now, 41 percent would like to see about the same amount, and 55 percent would like to see less from candidates. There is a lot to unpack here. Clearly, people want less politics. I regret not asking whether they would like to see more or less news in their feeds. That comparison would have been interesting, especially to learn whether people distinguish between content from candidates and news content about the election.

This poll from Pew is worth considering, as the share of people on most platforms who get news there has increased, even on Meta platforms, which have reduced the reach of news and politics. I suspect that how people define political content is nuanced.

A few other interesting results from our poll:

Most people want to see diverse perspectives in their feeds, while 24 percent want to see content that reflects views similar to their own.

Sixty-nine percent say platforms should not highlight political content.

Over 90 percent of people are okay with companies reminding people to register to vote and telling them when Election Day is. Seventy-four percent are okay with platforms taking action on campaign accounts. Platforms are in the clear when it comes to helping people register to vote and informing them of Election Day. After that, things get murkier, especially regarding campaign accounts.

Seventy-four percent of people overall are fine with platforms taking action on candidates, and even among Republicans, 63 percent said it was okay. That is far below the 82 percent of Democrats, but still a majority.

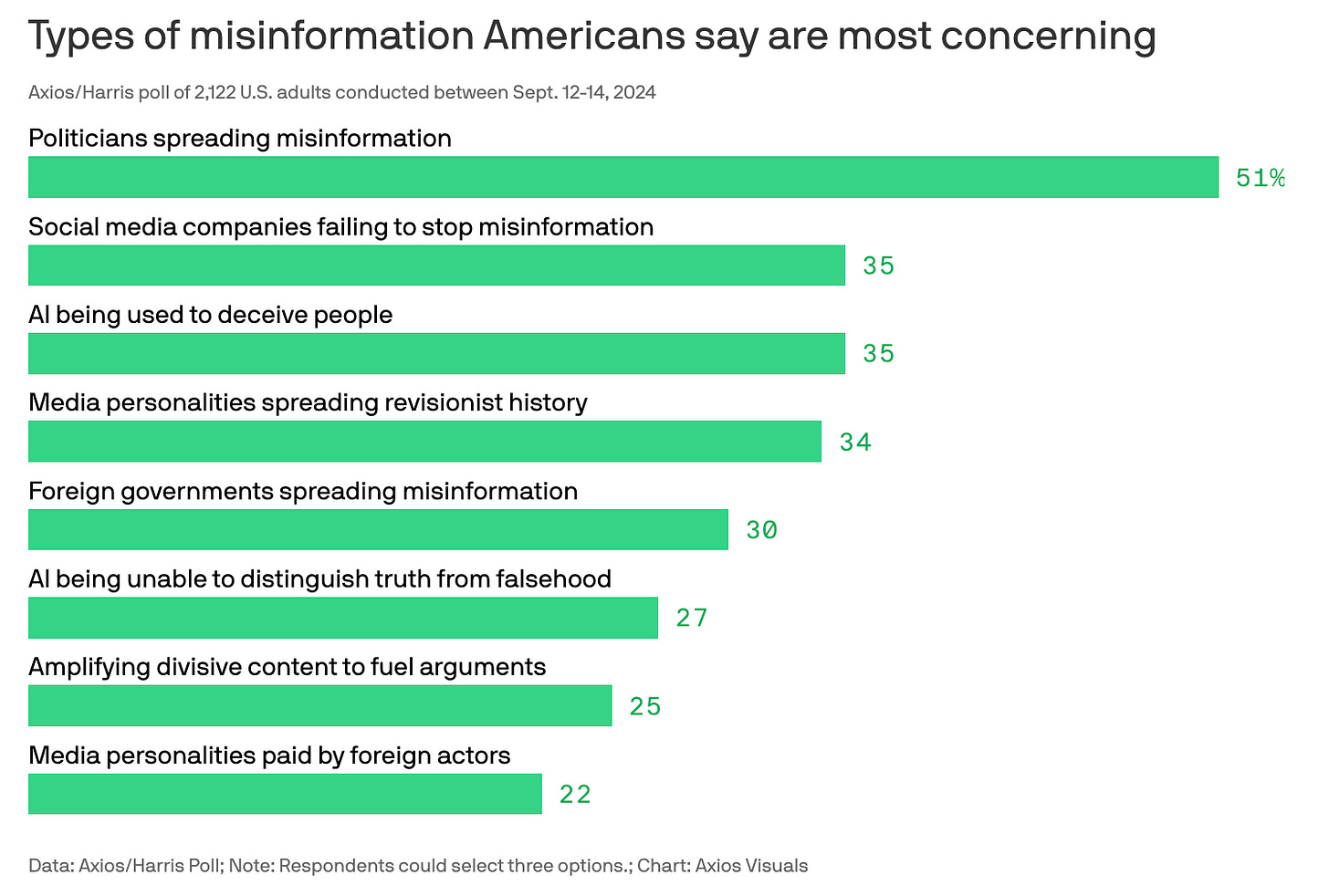

This is interesting when you consider this poll from Axios last week, which showed that misinformation spread by politicians themselves is people’s top concern, with the second being that social media companies fail to stop misinformation. Our poll showed that 62 percent of people didn’t trust the government or private companies to make content moderation decisions. I’d love to know whether Axios dug any deeper into what people expect social media companies to do to stop misinformation, and whether that includes action on candidate accounts. Our poll suggests that people are okay with such action even though they don’t trust the platforms to take it.

I knew there would be no clean answer for companies on this front, and these numbers bear that out.

I should also note that very few people were okay with platforms selling political ads. In last year’s BPC survey, we likewise found that very few people were okay with platforms selling political ads, but very few found it unacceptable either. The vast majority didn’t care. We didn’t offer a “don’t care” option in this poll, and we probably should have.

Eighty-four percent of people think influencers should disclose whether they are being paid, and want to know whether content was generated by artificial intelligence. As the use of influencers grows, not just by campaigns but by brands of all kinds, people want to know who is being paid. The FEC has done nothing in this area, and the FCC has failed to police it. Enforcement is hard because it is difficult to track when and where money changes hands. It’s even trickier when the exchange isn’t monetary but involves other things of value, like access and parties.

My takeaways from these results are:

The media is incentivized to discuss how artificial intelligence might go wrong. Companies and others need to do more than just talk about AI’s positive use cases; they need to help people learn to use it so they can see both its positive and negative use cases for themselves.

Despite all the coverage, censorship and content moderation are not at the top of the issues people care about. I think the topic makes for good headlines and good fundraising, but we should keep in perspective how concerned people actually are.

Companies should do more to explore the nuances of what types of political and news content people want in their feeds. This likely means giving people more choice, but companies should not amplify political content unless it is information about registering to vote or when Election Day is.

Platforms will just have to make a judgment call about whether to take action on candidate accounts and recognize that a segment of the population will consider either decision political. There is no winning here. The best they can do is decide now which values will guide such a decision and explain their reasoning when they make it.

Influencers need to disclose when they are paid, and the government needs to require campaigns to disclose more about their work with influencers.

None of this will be solved in the next month. These issues will continue regardless of who wins this election. It would behoove all of us to keep investigating the nuances of what people want, and when. If you want to start now, Jigsaw presented a fascinating paper on that exact topic at the Trust and Safety Researchers Conference. I know I’ll be digging into it more.

Please support the curation and analysis I’m doing with this newsletter. As a paid subscriber, you make it possible for me to bring you in-depth analyses of the most pressing issues in tech and politics.