Comparing tech election recommendations

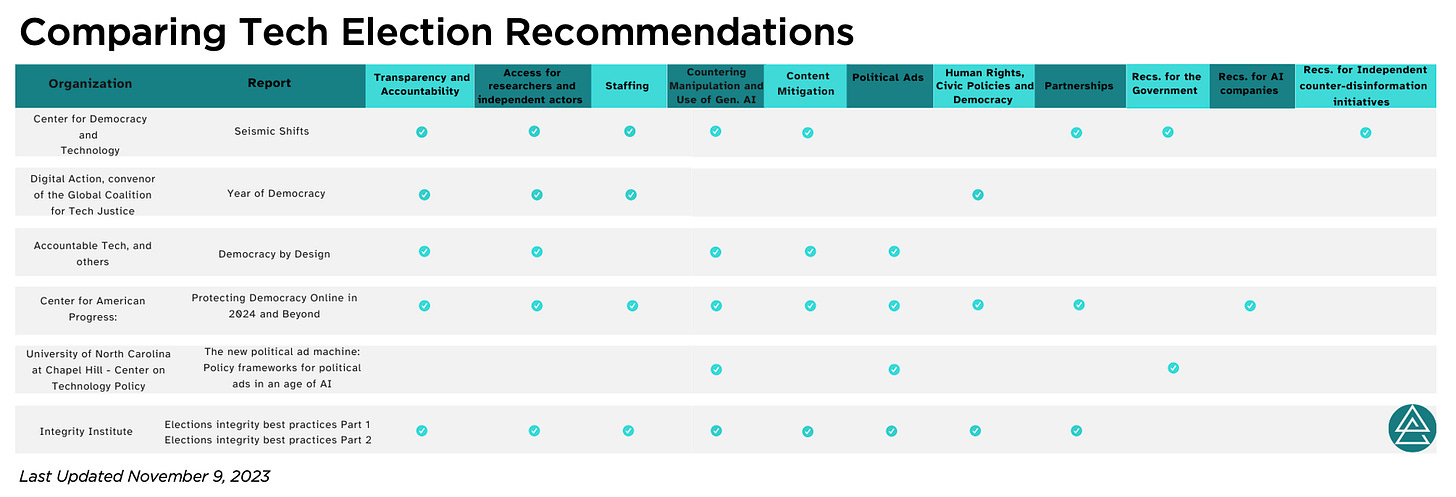

We look at what four civil society organizations think platforms should do on politics and how their recommendations collectively compare to the Integrity Institute guides.

Today’s piece is co-authored with Ana Khizanishvili.

You might remember my piece at the end of September, where I mentioned all of the tech and elections reports that had come out. I thought it might be helpful to compare the recommendations across these reports and see how they stack up against the two election integrity guides we published at the Integrity Institute. I asked Ana to help me compile everything.

What follows is a concise analysis of five reports by leading organizations at the intersection of responsible tech and democracy. These reports include:

Seismic Shifts: How Economic, Technological, and Political Trends are Challenging Independent Counter-Election-Disinformation Initiatives in the United States by the Center for Democracy and Technology

Year of Democracy by Digital Action, convenor of the Global Coalition for Tech Justice

Democracy by Design by Accountable Tech and its partners

Protecting Democracy Online in 2024 and Beyond by the Center for American Progress

The new political ad machine: Policy frameworks for political ads in an age of AI by the Center on Technology Policy at the University of North Carolina at Chapel Hill

They offer recommendations for various stakeholders, including platforms, AI companies, government bodies, and civil society.

Please support the curation and analysis I’m doing with this newsletter. As a paid subscriber, you make it possible for me to bring you in-depth analyses of the most pressing issues in tech and politics. It’s your last day to take advantage of my birthday special of 20 percent off an annual subscription!

To identify commonalities and gaps, we've grouped the recommendations into categories:

Transparency and Accountability: All reports emphasize the need for greater transparency and accountability on the part of platforms. This includes standardizing transparency reports (including reports covering "election seasons"), disclosing election-related policies, establishing oversight mechanisms, and ensuring independence from government influence.

Access for Researchers and Independent Actors: All reports also call for greater access to platform data for researchers. Recommendations include expanding partnerships with fact-checkers and independent organizations working to protect electoral integrity, providing direct data access for research, maintaining tools like CrowdTangle, offering free APIs, complying with the Digital Services Act, and conducting election-specific studies.

Platform Staffing: Adequate platform staffing, especially of trust and safety teams during elections, is a consistent theme across the reports. They recommend reinvesting in trust and safety teams, including teams dedicated to elections, and keeping that staff in place throughout the entire election process.

Countering Manipulation and Use of Generative AI: The reports warn of the risks generative AI poses to election integrity. Recommendations call for prohibiting, in both policy and practice, the use of generative AI or manipulated media, and for auditing machine learning content classifiers for political bias.

Content Mitigation: The reports focus on measures to counter manipulation, misinformation, and disinformation. Recommendations include using "virality circuit breakers" to slow disinformation and halt algorithmic amplification and rampant resharing, flagging fast-spreading posts, providing tools for fact-checking and for auditing civil graphs and content, extending warning screens, and upranking authoritative information.

Political Ads: Two reports specifically address platform policies and practices related to political ads, suggesting the adoption of strong standards, clear disclosure of AI use, and timely enforcement of ad policies.

Building Partnerships: Half of the reports stress the importance of partnerships. They call for platforms to appoint liaisons for civil society, develop civic engagement products, and expand collaborations with election authorities.

Human Rights and Civic Politics: The reports highlight the need to uphold international human rights standards, publish robust human rights impact assessments, and standardize election-period risk assessments, including applying lessons learned to anticipate political shifts.

We know this graphic is pretty hard to read. We’ve put a version here that allows you to zoom in more.

Some reports also offer recommendations aimed at specific stakeholders:

The Center for Democracy and Technology's report encourages the government to draw on trust and safety expertise, further support the development of the field, and be transparent about its role in countering election disinformation. The report also calls on civil society, research institutions, and funders to develop resources to combat coordinated attacks against researchers, shift focus to year-round harm reduction strategies, and concentrate on mitigating the impact of disinformation "superspreaders."

The Center on Technology Policy at the University of North Carolina at Chapel Hill takes a different approach. It focuses its recommendations solely on the government's responsibility for mitigating election harms, particularly those involving generative AI. The report underlines the importance of digital literacy programs and of detecting and mitigating bias in political ads, specifically bias introduced by generative AI (GAI) models. It also recommends that the government promote learning about GAI by funding research, assessing impact, and incorporating the findings into policy.

The Center for American Progress offers recommendations for AI companies, including creating election-specific policies, being transparent about how they enforce their usage policies, and democratizing the use of LLMs for content moderation, especially of content generated by their own AI models.

These reports collectively serve as valuable guides for platforms, governments, civil society, and AI companies preparing for the 2024 elections boom.

We also compared the recommendations to the Integrity Institute's Election Integrity Best Practices guides, which take a somewhat different approach from the other reports. The main difference is the level of detail. While the external reports collectively provide detailed and insightful recommendations, the Institute's guides go beyond recommendations to break down the reasoning behind them and offer concrete solutions.

The other big difference is that the Institute guides explain how elections work differently around the world, address how to deal with election commissions that might not be impartial, and offer frameworks for how platforms might prioritize work across countries and product areas. These tradeoffs are among the most challenging that platforms face, and they are an area where civil society and others could do more to help identify which election issues platforms should tackle first.

As tech companies continue to share their plans for the 2024 elections, we will be keeping track of how they align with these recommendations. If there are any reports in this area that we missed or that you will be releasing, please let us know so we can add them!