Please consider supporting this newsletter. For $5 a month or $50 a year, you’ll get full access to the archives and to newsletters like this one that are just for paid subscribers, and you’ll support my ability to do more research and writing on issues at the intersection of technology and democracy.

Hello from the Acela train from Washington DC to New York City. I’m headed north again to spend four days with the Integrity Institute staff to do strategic planning for the rest of the year. It’s hard to believe that we are already in May. 2024 will be here before we know it.

Last week, I ran a poll on my LinkedIn profile asking people to vote on what topics they wanted me to cover next in this newsletter. Seventy-five people voted, with 48 percent wanting to hear more about how artificial intelligence might impact politics.

This is a topic I expect to cover a lot over the next few years. It’s hard to know all the ways in which it might be deployed - but consider this a start. Also, spoiler alert. I don’t think 2024 will be the last human election, nor do I think we need to prepare for the end of the world. Read on for my predictions and why we need to dial down the panic machine a little.

I thought the RNC was pretty clever when they responded to Joe Biden’s re-election announcement with an AI-generated ad. It was a textbook example of how to get a ton of free coverage without spending a lot of money because it was using a new technology people couldn’t stop talking about.

It reminded me of how obsessed campaigns were in the late 2000s and early 2010s with looking innovative and using the latest technology. They wanted to look cutting-edge and cool, and the media fawned over it.

These days, though, the angle is not how innovative campaigns are but how scary it is that this can be done at all. Members of Congress introduced bills to require disclosure when AI is used in political ads. The American Association of Political Consultants condemned the use of deceptive generative AI content in ads.

While transparency is good, we need to consider how these tools might be used for good and bad before we start making declarative statements. Here’s my attempt to do just that in my favorite format - a grid. 😀

As soon as I hit send on this newsletter, I’m sure I will think of more ways AI could be used in politics, but this is a start. What did I miss? Let me know.

Beyond the areas above, another open question is how these platforms will handle political prompts overall. I’m working on a comprehensive analysis of that and hope to have it done in the next few weeks. But here are a few things I’ve noticed so far:

OpenAI is one of the few services to have specific policies about politics, but it is also one of the few to actually allow prompts such as “write a blog post about the importance of voting.”

When I put the same prompt into Google’s workplace tool, I got the following error:

When I asked Bing’s image generator to create a picture of a robot campaigning for president, I got this error:

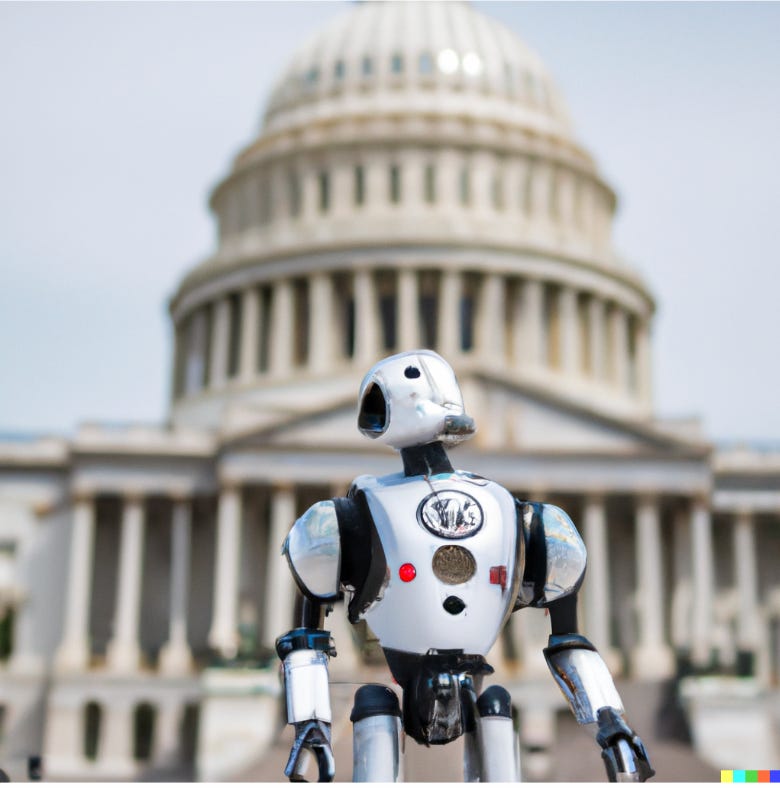

But when I asked OpenAI’s DALL-E to make me an image of a robot in front of the Capitol, I got this:

This is still early days, and I hope these tools don’t block all of this forever because, selfishly, I’d like to use more of them in my work. Given that a lot of my work is on elections, if political content is fully banned, these tools won’t be as useful to me.

That said, I’m excited to think through these challenges more and how AI might be deployed for politics in responsible ways. I don’t think just banning it is the answer because these tools could go a long way in helping candidates and organizations who don’t have the resources to scale up.

This brings me to dialing down the panic machine.

A couple of weeks ago, I was chatting with Micah Sifry about an issue of his newsletter titled “Clowns to the Left, Jokers to the Right,” which looks at Tristan Harris’ new panic-inducing video, The AI Dilemma. Micah and I don’t always agree, but his critique of Harris was spot on. I encourage you to read it. In our exchange, Micah asked me where I was on the panic/no panic AI scale. I wrote back that I was center no panic. Let me explain.

On one end of the spectrum, you have the people absolutely panicking about AI. In addition to The AI Dilemma video, there’s Jonathan Haidt’s latest piece in The Atlantic, co-written with Eric Schmidt. There’s also the open letter asking for a six-month pause, and the AI pioneer who left Google to warn people about the rapid development of this technology.

These folks are exhausting and not at all practical about mitigating the concerns. I mean, come on - calling 2024 the last human election on the premise that whoever wins going forward will be the candidate with the strongest computing power? Even in the video, they admit that a campaign's effective use of data and technology has already been shown to impact elections. I think it’s true that the campaign that learns to use AI will have a leg up, but technology alone does not win and lose elections. The candidate and his/her message still matter a lot. I don’t think AI changes that.

Nirit Weiss-Blatt also has a great breakdown in Techdirt of this panic-as-a-business phenomenon.

On the other end, you have the people building these tools. While it is easy to accuse these folks of caring only about profits, I find that many of those I talk to are genuinely trying to strike a balance: putting the tools out safely while recognizing that you can’t account for every single bad thing that might happen before you deploy them. If you want a sense of how one team thinks about this, the Integrity Institute podcast, Trust in Tech, just released an episode with two people at OpenAI working on trust and safety.

There are some real concerns and bad things that AI might unleash. Those on the panic end of the scale aren’t wrong about some of these risks. We should go into this eyes wide open, which I think we are. Because we learned with social media what happens when we think about these things too late, we are now having these conversations much earlier in the deployment of these tools. That’s a good thing.

Moreover, while AI will be disruptive, it will also be used for good. As a one-woman show at Anchor Change, I’ve saved so much time using tools like Otter.ai to transcribe audio and ChatGPT to format the article links at the end of this newsletter. I’m playing with Beautiful.ai to create slide decks, and I’ve gotten access to Google’s AI tools for the workplace and Bing’s image generator. Being able to picture something in my head, type it in as a prompt, and seconds later have that image in front of me is mind-blowingly cool. Here’s an example of one I made the other week with the prompt “Path along a river at dusk in an evergreen forest with fireflies and a bear watching and lantern hanging on a branch.”

This is why I’m center no panic. I think we will evolve with this technology. Yes, we don’t know every way it might go wrong, but that’s true of any innovation. This technology is out in the world, and we can’t put the genie back in the bottle. All we can do is our best to use it responsibly, which is something nearly everyone has front of mind.

What I’m Reading

New York Times - Unions Representing Hollywood Writers and Actors Seek Limits on A.I. and Chatbots

The Verge - The United Kingdom's Online Safety Bill, Explained

Reuters - Latin American Election Influence Operation Linked to Miami Marketing Firm

Reuters - Google, Meta executives push back against Canada online news bill

The Information - Nearly Half of YouTube's U.S. Viewership is Now on TVs, Helping Drive Ad Shift

Harvard Magazine - Artificial Intelligence and Authoritarian Regimes

Freedom House - Election Watch for the Digital Age

Nextdoor - Nextdoor is Integrating Generative AI to Drive Engaging and Kind Conversations in the Neighborhood

Microsoft Blog - Announcing the Next Wave of AI Innovation with Microsoft Bing and Edge

Job Board

Google Careers - Rapid Response Director, YouTube Trust and Safety

Calendar

🚨 NEW 🚨

May 10, 2023 - Google I/O Conference

May 30, 2023 - Atlantic Council Report Launch: Telegram, WeChat and WhatsApp usage in the United States

August 10 - 13, 2023 - Defcon

Topics to keep an eye on:

Facebook 2020 election research

TV shows about Facebook - Doomsday Machine and the second season of Super Pumped

May 10 - 12, 2023 - All Things in Moderation Conference

May 10, 2023 - Google I/O Conference

By May 12 - Meta response on spirit of the policy decisions

May 14, 2023 – Thailand election

May 14, 2023 – Turkey election

May 15-16: Copenhagen Democracy Summit

May 13 and 27, 2023 – Mauritania election

May 21, 2023 – Greece election

May 21, 2023 – Timor-Leste election

May 24-26, 2023 - Nobel Prize Summit: Truth, Trust and Hope

May 30, 2023 - Atlantic Council Report Launch: Telegram, WeChat and WhatsApp usage in the United States

May: EU-India Trade and Technology Council meeting in Brussels

June 4, 2023 – Guinea Bissau election

June 5-9 - RightsCon

June 5 - 9 - WWDC - Apple developer event

June 5, 2023 - The European Commission, European parliament and EU member states are due to agree a final definition for political advertising

June 11, 2023 – Montenegro election

June 19, 2023 - Meta response due on COVID misinfo

June 24 - June 30 - Aspen Ideas Festival

June 24, 2023 – Sierra Leone election

June 25, 2023 – Guatemala election

TBD June: DFR Lab 360/OS

July 11-13, 2023 - TrustCon

July 2023 – Sudan election (likely to change further due to the clashes that erupted in mid-April, despite a temporary humanitarian ceasefire)

July 23, 2023 – Cambodia election

July or August 2023 – Zimbabwe election

August 10 - 13, 2023 - Defcon

August 2023 – Eswatini election

August 2023 - First GOP Presidential Primary Debate

Mid-September: All Tech Is Human - Responsible Tech Summit NYC

September 27-29, 2023: Athens Democracy Forum

September 28-29, 2023 - Trust & Safety Research Conference
