Platforms and Governments - It’s Complicated

A little insight into how tech companies and government officials engage

A happy Sunday afternoon to you from rainy Washington, DC. I’m feeling a little guilty that I’m only now getting around to writing this week’s free newsletter. After three weeks of travel that took me from Sweden to Jordan to LA to Georgia to NYC, I spent 10 hours yesterday catching up on work I had pushed off. I’m exhausted, so I allowed myself a lazy morning. I still feel guilty for being lazy, which is very type-A, workaholic American of me. 😂

Good thing I have this coffee cup to keep my priorities straight.

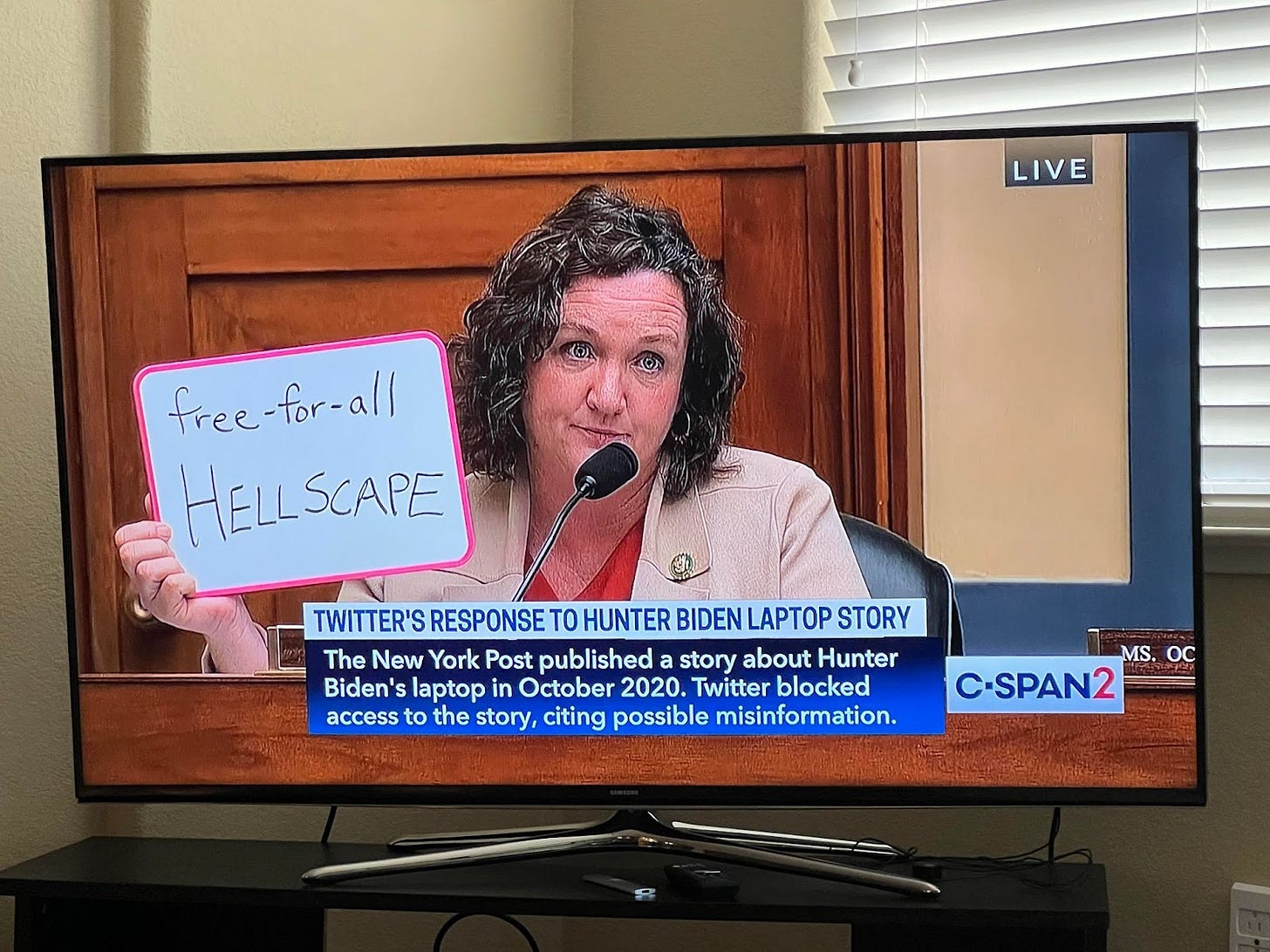

On Wednesday, I unpacked the Congressional hearing with the former Twitter executives. It covered everything from how they handled the story about Hunter Biden’s laptop, to how they decided which content to remove or reduce the reach of, to the role of government in pressuring companies over how they handle content on their platforms.

Today I thought I’d dig a bit deeper into how companies engage with government actors, the existing tensions, and how they try to navigate various tradeoffs.

My experience is closely tied to my work at Facebook, though I have a pretty good understanding of how other companies are structured. That said, I’m making some generalizations below, and I’ll try to point out where there are differences, but know that there could be some nuances I’m missing.

First, let’s look at the different teams that might engage with government officials:

Public Policy - These folks regularly engage with lawmakers and their staff. In the United States, they have to report how much they spend lobbying the federal government and, I believe, some state governments.

Content/Product Policy - These are the people who write the platform's policies: what is and isn’t allowed. They don’t regularly engage with government officials, but from time to time they might join a meeting to help explain the platform rules. For instance, Monika Bickert, the head of this team at Meta, testified at a Senate hearing in April 2021.

Customer Support Staff - This was the team that I helped build at Facebook. We were the ones who worked with politicians and governments on how to best use the platform. We focused on helping them use the free tools, and another team helped them with ads. You can read more about Meta’s work in this space here.

Legal - The lawyers were the ones who worked directly with law enforcement and governments on requests for information or for the company to take specific actions. Meta, Google, Twitter, and many other platforms generate transparency reports to share how many of these requests they get and how many they fulfill. Meta publishes its operational guidelines for law enforcement officials seeking records here.

Threat Intel/Security - These teams look for threat actors on the platforms and protect the platform from activities such as hacking. Sometimes they roll up into a broader trust and safety organization; other times they are a stand-alone team. This team would engage with the FBI or other government officials who might be sharing tips on problematic activity. Meta and other companies publish reports when they do these takedowns.

Next, let’s look at the org chart for these teams. This varies by company. At some, the public policy, content policy, customer support, and legal teams all report to the same person. At others, it’s just the public policy, content policy, and customer support teams. Threat intel/security might be split among different teams too. While we could ask each company to publish its org chart, I don’t think that would tell us much.

The biggest concern people have is the decision-making process within these companies: who makes the final call on the rules and on when someone has violated them, especially in high-profile situations like Trump’s removal or the suppression of the Hunter Biden laptop story.

Some strongly feel that the people writing and enforcing the rules (the content policy team) should not report to the same person the public policy team reports to. They are concerned that political considerations will factor into decisions about whether content should be actioned.

That is a valid concern, but I don’t think you solve it by changing the org chart. At both Facebook and Twitter, these teams report to the same person. Gadde said in her opening statement that she “had many distinct teams reporting to me, including legal, trust & safety, public policy, corporate security, and compliance.” Eventually, these teams must report to a common person, whether a Vice President or the CEO.

I think that most companies have taken great care to ensure checks and balances in their decision-making processes. There should be oversight on those checks and balances, but I don’t think there is an ideal org chart. What I think is fair is asking those leaders how they take political considerations into account when making decisions. We can dream of a world where political considerations aren’t taken into account, but that’s not reality because, as we saw Wednesday - you might get dragged in front of Congress - or in some countries, thrown in jail - if governments don’t like your decisions.

That takes us to how these companies engage with government entities. The day-to-day is relatively routine and mundane. The public policy teams will meet with lawmakers to answer their questions on various issues. For instance, this week the European Commission published the companies’ first baseline reports on how they are complying with the Code of Practice on Disinformation. The public policy teams likely would have led negotiations for that updated code.

The customer support teams answer questions from government entities about how products work or file bugs if something is broken. Or they might be doing cool partnerships with NASA to allow people to experience being on the moon via Oculus.

Legal teams are navigating law enforcement requests for information, and the threat intelligence teams might be getting tips about problematic activity.

What happens when a government or political entity flags potentially problematic content to a platform? First, all platforms have processes in place to evaluate the request. So a government asking for something to be taken down doesn’t mean it automatically happens. The request needs to be evaluated to see whether the content violates the law or platform policies. Maybe the government didn’t go through the proper steps to request data. Maybe the content doesn’t violate anything. Roth said on Wednesday that Twitter separated the teams that received the requests from the teams that evaluated them. In tricky situations where the answer isn’t clear, the decision would likely be escalated to someone higher up in leadership to make the call.

How much pressure governments put on companies to take action can also vary. It’s not great when the President of the United States says your platform is killing people. Getting grilled by Members of Congress who are threatening criminal action is not fun - and yeah, that’s going to be in the back of the mind of anyone making these calls. Some governments, like India and Turkey, have passed laws to compel companies to do what the government says. Before Musk’s takeover, Twitter was pushing back on the Indian government in court.

So, in the end, as you can see, it is complicated. I’ll keep saying over and over that we should be debating how companies make decisions and the role of government, but let’s make sure to have the facts of what is happening and not jump to conclusions to make a political point. Otherwise, as Rep. Jamie Raskin called it Wednesday, we’ll stay on this wild cyber goose chase.

PS: Bonus content from Wednesday. If you didn’t watch the hearing, various Democrats took different approaches to what they were witnessing. My friend Krista particularly enjoyed Rep. Katie Porter’s rant and proudly sent me this picture, which she requested be included in this newsletter.

I’ve started a paid version of this newsletter! For $50 a year or $5 a month, you’ll get exclusive content and full access to the archives. Please consider upgrading! Your support allows me to spend more time researching and writing.

What I’m Reading

Tech Policy Press: Evaluating Cries of Censorship on Capitol Hill

The Lawfare Podcast: A Jan. 6 Committee Staffer on Social Media and the Insurrection

Modern Diplomacy: Defending Democracy Against a Rapidly Evolving Internet Landscape

The Daily Show: Chelsea Handler Discusses Being Childless by Choice - Long Story Short

Bloomberg: TikTok Reveals Russian Disinformation Network Targeting European Users

New York Times: Steve Bannon’s Podcast Is Top Misinformation Spreader, Study Says

New York Times: Free Speech vs. Disinformation Comes to a Head

New York Times: Why Are You Seeing So Many Bad Digital Ads Now?

European Commission: Signatories of the Code of Practice on Disinformation deliver their first baseline reports in the Transparency Centre

Jobs Board

Reddit: EU/UK Policy Lead

Atlantic Council: DFR Lab Digital Sherlocks Applications Open - Deadline February 15

Mozilla Foundation: Director of Campaigns

Ford Foundation: Program Associate

Epic Games: Policy Lead, Trust and Safety

Institute for Rebooting Social Media: Governance in Online Speech Leadership Series: Apply today! - Deadline February 24

Calendar

🚨 New! 🚨

March 2, 2023 - 2023 V-Dem Democracy Report Launch

Topics to keep an eye on:

YouTube Decision on Trump Reinstatement

Facebook 2020 election research

TV shows about Facebook - Doomsday Machine and second season of Super Pumped

February 16, 2023 - Platforms have to announce EU numbers to comply with DSA

February 21, 2023 - SCOTUS hears Gonzalez v Google

February 22, 2023 - SCOTUS hears Twitter v. Taamneh

February 23, 2023 - Nigeria Election

February 23, 2023 - Meta response to cross-check due

February 23 - 24: Designing Technology for Social Cohesion

February 2023 - Djibouti Election

February 2023 - Monaco Election

March 1, 2023 - All Tech is Human: Tech & Democracy: A Better Tech Future Summit

March 2, 2023 - 2023 V-Dem Democracy Report Launch

March 5, 2023 - Estonia Election

March 10 - 19: SXSW (Here’s the panel I’ll be on!)

March 20 - 24, 2023: Mozilla Fest

March 23, 2023: TikTok CEO Congressional Hearing

March 29 - 30, 2023: Summit for Democracy

March 2023 - Antigua and Barbuda Election

March 2023 - Federated States of Micronesia Election

March 2023 - Guinea Bissau Election

March 2023 - Sierra Leone Election

April 30, 2023 - Benin Election

April 30, 2023 - Paraguay Election

April 2023 - Andorra Election

April 2023 - Finland Election

April 2023 - Montenegro Election

May 7, 2023 - Thailand Election

May 15-16: Copenhagen Democracy Summit

June 5-9: RightsCon

June 24 - June 30: Aspen Ideas Festival

June 25, 2023 - Guatemala Election

June 25, 2023 - Turkey Election

TBD June: DFR Lab 360/OS

July 2023 - Cambodia Election

July 2023 - Timor-Leste Election

July 2023 - Zimbabwe Election

August 6, 2023 - Greece Election

August 2023 - Eswatini Election

September 27-29, 2023: Athens Democracy Forum

TBD September: Atlantic Festival

TBD September: Unfinished Live

TBD September: Trust Con and Trust/Safety Conference (If they do them again)

September 2023 - Mauritania Election

October 8 - 12: Internet Governance Forum - Japan

October 10, 2023 - Liberia Election

October 12, 2023 - Pakistan Election

October 14, 2023 - New Zealand Election

October 22, 2023 - Switzerland Election

October 2023 - Argentina Election

October 2023 - Luxembourg Election

October 2023 - Oman Election

November 12, 2023 - Poland Election

November 20, 2023 - Marshall Islands Election

November 29, 2023 - Ukraine Election

November 2023 - Bhutan Election

November 2023 - Gabon Election

November 2023 - Rwanda Election

December 10, 2023 - Spain Election

December 2023 - Bangladesh Election

December 2023 - Democratic Republic of the Congo Election

December 2023 - Togo Election

TBD - Belarus Election

TBD - Cuba Election

TBD - Equatorial Guinea Election

TBD - Guinea Election

TBD - Madagascar Election

TBD - Maldives Election

TBD - Myanmar Election

TBD - Singapore Election

TBD - South Sudan Election - (Unlikely to happen)

TBD - Turkmenistan Election

TBD - Tuvalu Election

TBD - Haiti Election

July 15-18, 2024 - Republican National Convention