The Degree of Difficulty Gap

How tech and its critics are talking past one another - including on elections. Also, what the tech and elections debate is missing.

Two weeks ago I was driving home from my friend’s place on the Eastern Shore - Kent Island to be precise - and I turned on an older podcast of Malcolm Gladwell and Adam Grant talking about their then-new books.

As I was driving over the Bay Bridge, Gladwell said to Adam that the sport of diving - something apparently Adam is very good at - gave the world a gift in the concept of degree of difficulty, which helps measure the technical difficulty of a skill, performance, or course. If a light bulb could have gone on above my head, it would have. I had a eureka moment: what Gladwell was saying gave me a way to explain something that had been bothering me for a while in the debate around tech.

That is - we don’t talk enough about how hard it is for the platforms to actually execute the policies that they have. They have set the expectation that they are on top of these problems, and then everyone gets frustrated when a simple search turns up violating content.

The same goes for the platforms’ work around elections. This is obviously a topic near and dear to my heart and something I’m obsessed with. I’m going to try to be as straightforward as possible, but do know some bias could creep in given my role in this work over the years.

Let’s recap where we are right now - not only in the U.S. but around the globe.

First, let’s take a look at what is happening in Kenya - something I’ve seen little news about this week, but which I think could be a harbinger of what we might see here in the US in 2024. Kenya went to the polls on August 9, but the election commission didn’t declare a winner until August 15. When it did, four of the seven commissioners refused to validate the vote. Now, under Kenyan law, the commission’s chair is the only one who matters in certifying the election. Odinga - the candidate declared the loser - can challenge the result in the courts, and is doing so. International observers are urging calm as we wait for the challenge to work its way through the legal system.

But what do the tech companies do in the meantime? Violence is happening and the threat of more is high. What should they do about content that is questioning the results during this period of time? When I asked people that question this week I got a lot of blank stares and comments about how that’s a really hard question to answer. Yes, yes it is.

In the United States, we had a deluge of announcements over the last two weeks from Twitter, Meta, TikTok, and Salesforce. Google made its announcement quietly last March and updated its political ad policies in May. Spotify quietly announced in May that it was allowing political ads again. Hulu had that dustup over which issue ads it did or didn’t allow, and in July it gave in to pressure and said it would allow political issue ads.

Naturally, I started getting a lot of questions from reporters, even before these announcements were made, about whether the companies are ready for the midterms and whether they are “doing enough.” To help me think through this question, I created a chart, in addition to the massive database of tech company announcements on elections, that looks at the announcements the companies made, what their main points were, and what their critics were saying. Here’s what I found.

The critics’ main pain points tend to be either disagreement with the platforms’ policies themselves (e.g., fact-checking politicians or not) or that enforcement of those policies isn’t up to par because critics can still find bad content.

Critics pointed out questions on which the platforms were quieter, such as:

Will they implement any ranking changes? (Meta called them break-glass measures in 2020.)

Will they provide data to researchers?

Are they doing enough for elections elsewhere in the world? (Many of the platforms released information on what they’re doing in the Philippines, Kenya, and Brazil, among others, this year, but nothing yet about Italy, which has its elections in September.)

When will they release the various research and surveys we know they’ve done? (I’m looking here at the 2020 research with Meta and Twitter’s world leader survey.)

There are twelve companies that made announcements for the US 2020 election that we still haven’t heard from about what they are doing for the midterms. (I made a chart of that too at the bottom of this doc) Most notably YouTube. I imagine we’ll see another round of releases ahead of National Voter Registration Day on September 20.

There’s no conversation happening about what the telecom companies and others are doing about all the spammy text messages. There’s little to no conversation about how podcast or streaming platforms are thinking about these issues. (BTW, this week streaming overtook cable as a way to consume TV.) I learned some interesting stats this week about the gaming community - did you know that the average gamer is 34 and that 45 percent of US gamers are women?! There’s very little conversation about how political messages do or do not manifest themselves in those chat rooms.

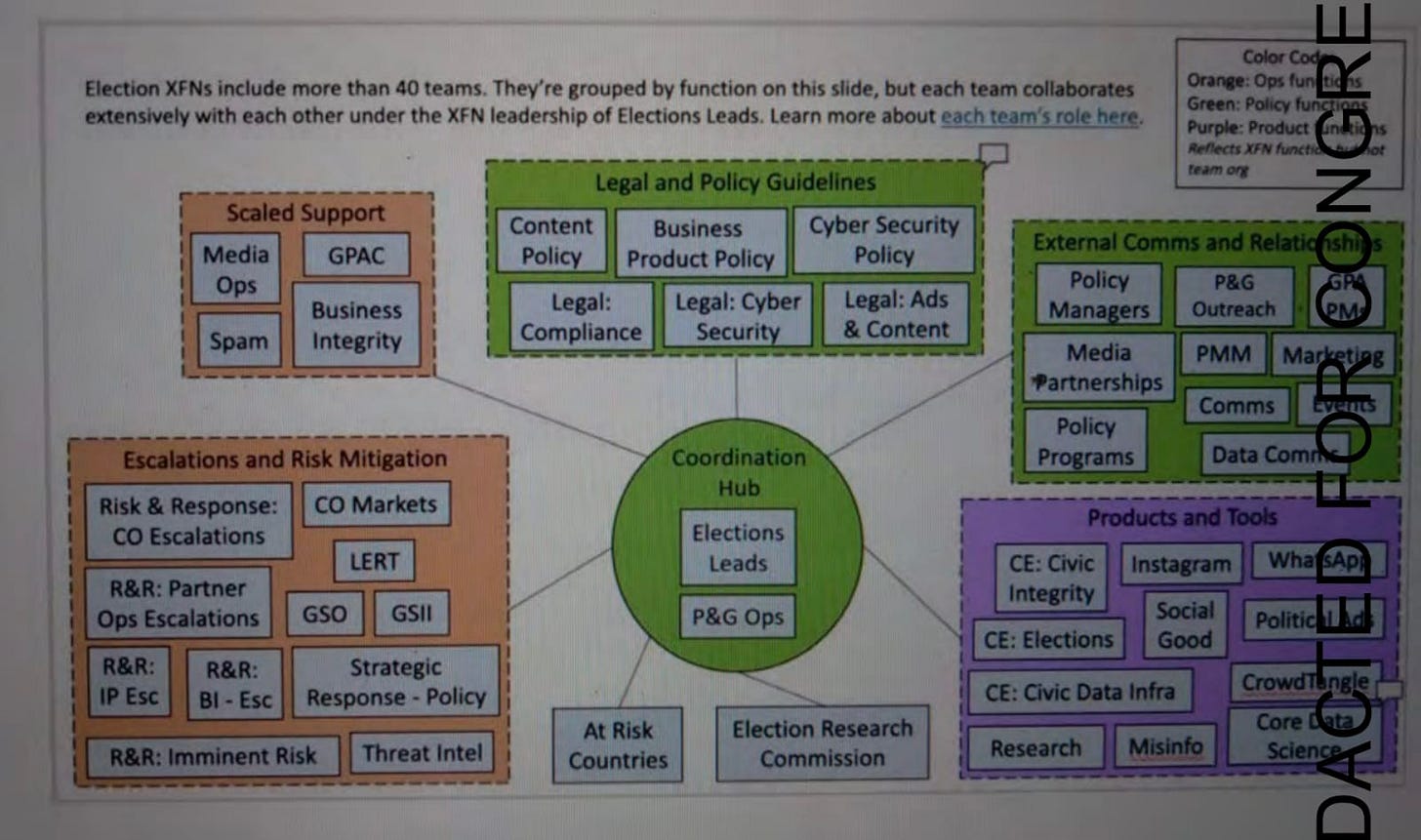

So, both things can be true - many of the platforms can do, and are doing, a lot on things such as combating foreign interference, pushing out authoritative information, political ad transparency, policies against harassment of and violence against election officials, labeling, and fact-checking. Just look at the number of teams that work on elections at Meta. This is a slide my team made in the 2019 era; it was among the documents Frances Haugen leaked.

But it’s also true that they are being quieter on some things and doing some things differently. I would have preferred that they come out and say: we did research and evaluation on our work coming out of 2020; we found x, y, and z to be effective, but a, b, and c not as effective; thus we’re going to keep x, y, and z the same and make some changes to how we do a, b, and c. I’m really glad, for instance, that Twitter put numbers on the effectiveness of its labels. However, Meta says it’ll be deploying labels in a more targeted and strategic way. How is that going to actually work?

This brings me back to the question of the degree of difficulty, and of how transparent the platforms are about how they will actually enforce these policies. It’s something we should talk about more because it’s wrong to take at face value that these companies are going to be perfect at this. For instance, this week TikTok said that paid influencer partnerships in the arena of politics fall under its political ad ban. I know firsthand how hard that is to police on the back end because you are relying on the influencer to disclose the partnership. None of the platforms has the ability to know whether money was exchanged, so they can’t proactively prevent that content from going up. They’ll have to be reactive. But I’m not sure that’s the expectation people have when they see the announcement. No doubt we’ll see some stories in the coming months about how stuff got through.

Moreover, we’ll see reports like this one where some group decides to test a platform’s policies by running purposely violating content. When that content gets through the automated systems right away, the group immediately screams about how this proves the platforms aren’t living up to their promises. We’ll see complaints like this one from Senator Blumenthal about Google and scam ads that fail to take into account why the Google system works the way it does. Or you’ll see ones like this where someone does some searches and finds violating content. But this fails to take into account that the platforms not only use a mix of technology and humans for their review processes but also do some things proactively and others reactively. It also fails to take into account how hard all of this is to do at scale (Mike Masnick has a great post about this). That’s where platforms could be more transparent and where other stakeholders should be talking about what the right mix is.

So, I remain nervous. I’m nervous that we aren’t thinking about the new threats that might emerge. I’m nervous that these seemingly small changes might have a big impact on execution that we simply can’t see yet. I’m nervous that we still don’t have the access researchers, regulators, and others need to understand the true impact of what happens on these platforms. I’m nervous that we aren’t talking enough about the other surfaces where the political conversation is happening. And I’m nervous that the midterms aren’t going to see any major issues and we’ll be lulled into a false sense of security going into the 2024 elections.

I do appreciate something Nick Clegg said to Bloomberg: “Our work is not finished, there will be things we can’t predict, there will be things that go better and things that go worse. One thing I can assure you certainly as long as I’m doing this job is I will be unflinching and self-critical as to whether we are doing the right thing in roughly the right order.”

Having worked with Nick for a bit while I was still at the company, I 100 percent believe him. More people should put themselves in his shoes, and in the shoes of every tech executive who has to make these hard calls. And then we should have conversations about where we disagree with those calls and how we might find compromises.

Speaking of 2024: Meta this week also confirmed that it won’t be moving up its timeline for deciding whether to let Trump back on the platform. That’s going to be quite the debate as we go into the new year.

PS: Also some news about me this week. I’ll be a fellow at Georgetown this Fall where I’ll be hosting a discussion group about public policy solutions on the internet’s role in democracy.

What I’m Reading

Lawfare: How Unmoderated Platforms Became the Frontline for Russian Propaganda

The Hill: TikTok pushes back on House official’s warning about use

Bloomberg Twitter Space: Facebook, TikTok Prepare for the Midterms | Social Power Hour

Tech Policy Press: A Menu of Recommender Transparency Options

Stratechery: Instagram, TikTok, and the Three Trends

MasterClass: The entire White House series that now has sessions with President Bush, Condoleezza Rice, and Madeleine Albright.

Tech Policy Press: “Exhausting and Dangerous:” Is Election Disinformation a Priority for Platforms?

KIRO 7: Facebook parent Meta seeks to kill transparency requirements in Washington’s campaign finance law

The Guardian: Police call for Bolsonaro to be charged for spreading Covid misinformation

HS Today: DOD Releases Social Media Policy to Protect Against Disinformation and Fake Accounts

Think Tanks/Academia/Other

Center for Democracy & Technology: Improving Researcher Access to Digital Data: A Workshop Report

U.S. House Oversight Committee: “Exhausting and Dangerous”: The Dire Problem of Election Misinformation and Disinformation

Sciences Po Paris: Public and Private Power in Social Media Governance: Multistakeholderism, the Rule of Law and Democratic Accountability

Companies

SXSW Panels

It’s SXSW Panel Picker season and there are some great panels you should consider voting for:

Job Openings

U.S. House of Representatives: Software Engineer

Freedom House: Policy and Advocacy Officer or Senior Policy and Advocacy Officer, Technology and Democracy

There are **many** open positions at Freedom House. Check them out here: https://freedomhouse.org/about-us/careers

National Endowment for Democracy: Reagan-Fascell Democracy Fellows Program

Democracy Works: Openings for software engineer and director of HR

Atlantic Council DFR Lab: Variety of positions open. More info at link.

Atlantic Council: #DigitalSherlocks Scholarships

Meta Oversight Board: Variety of positions open. More info at link.

National Democratic Institute (NDI): Variety of positions open. More info at link.

Protect Democracy: Technology Policy Advocate

Calendar

Topics to keep an eye on that have a general timeframe of the first half of the year:

Facebook 2020 election research

Oversight Board opinion on cross-check

Senate & House hearings, markups, and potential votes

August: Angola elections

September 6 - 8: Code 2022 - Vox/Recode Silicon Valley Conference

September 11: Sweden elections

September 13: New Hampshire Primary (Hassan defending Senate seat)

September 13 - 27: UN General Assembly

September 20: High-level general debate begins

September 21-23: Atlantic Festival

September 27 - 28: Trust Con

September 28 - 30: Athens Democracy Forum

September 29 - 30: Trust and Safety Research Conference

October: Twitter/Musk Trial (Dates not set yet)

October 2 and 30: Brazil

October 15 - 22: SXSW Sydney

October 17: Twitter/Musk Trial Begins

November 8: United States Midterms

March 10 - 19: SXSW

March 20 - 24, 2023: Mozilla Fest

Events to keep an eye on but nothing scheduled: